Blind people and the World Wide Web

1 Blind people and the World Wide Web

Perhaps you've read a book recently? Perhaps when you finished you picked up a newspaper and got the sports headlines, or went online and surfed some travel sites to book next year's summer holiday? Your local newsstand easily has a hundred newspapers and magazines. If you have web access, you have billions of web pages available to you. Unless, of course, you're blind, in which case accessing printed or online resources suddenly becomes a very different proposition.

Traditionally, blind people have had only limited means of accessing printed material. Braille is the most famous access method, but only a tiny proportion of blind people can read Braille - some 2% in the UK. Recent years have seen the wider adoption of audio recordings, but like Braille these suffer from a lack of immediacy (you want the news today, not in a week's time once it has been translated), and a blind user is usually reliant on sighted people, often volunteers, to produce the material. This reliance, and the higher cost of producing alternative-format materials such as audiotapes, necessarily reduces the amount of material available. The result compares poorly with the choice of material available to sighted users.

The rise of affordable personal computing in general and the Internet in particular promised an incredible improvement in access to written materials. With a personal computer, some easily-available technology, and a web browser, you are no longer restricted to tapes sent through the post or the passive technology of the radio: you now have access to billions of web pages, personal, corporate, educational and entertaining, all available from your home. And there could be no better time for this revolution: the great majority of blind people in developed countries lose their sight through the effects of age, and with average life expectancy increasing, the number of potential blind Internet users grows and grows. There are some one million registered visually-impaired people in the UK, of whom 750,000 are over 75. They want access to the same material they've always had, whether it's the London Times or the National Enquirer, but the material may not be available in an alternative format. Relying on what other people choose to translate for your benefit reduces your choice and freedom. Besides, sighted people have taken to the Internet in their millions for booking holidays, researching family trees and countless other uses: blind people need the same opportunities, and the technology makes them possible.

This is not to say, alas, that the web is a happy land where a blind person can surf and browse with all the freedom and ease of a sighted person. To understand why, we need to examine how blind people access computers in the first place.

2 How blind people access computers

The last decade has seen the triumph of the rich graphical desktop, replete with colourful icons, controls and buttons all around the screen, controlled by the mouse pointer moving about the screen clicking and dragging. This is not, on the face of it, a usable environment for blind people, but use it they must.

Many people with a significant visual impairment have some degree of residual vision. There are assistive technology solutions for them: a screen magnifier application, such as ZoomText from Ai Squared, enlarges a small area of the display, potentially until it fills the entire computer screen. The user can move the magnified area around the desktop. This allows the user to control the computer interface directly, and is a good solution for people with gradually-degrading vision, especially those who are already familiar with their computer interface but are starting to have trouble seeing it. However, for those with a more severe visual impairment or complete blindness, there are two different options.

The first is to use a screen reader. This is an application that attempts to describe to the blind user in speech what the graphical user interface is displaying. It turns the visual output of the standard user interface into a format that is accessible to a blind user. In practice this means driving a Braille output device - a row of Braille cells with mechanical pins that pop up and simulate Braille characters under the user's fingers - or, more commonly, a text-to-speech synthesizer. We will deal exclusively with these text-to-speech users in the rest of this article because they form the great majority of users, actual and potential. The screen reader acts almost as a sighted companion to the blind user, reading out what is happening on the screen: popup boxes, command buttons, menu items, and text. Ultimately screen readers have to access the raw video output from the operating system to the screen and analyse it for information that should be presented to the user. This is a complex process, as you would expect from an application that is attempting to communicate a complicated graphical user interface in a wholly non-visual way. There are many screen readers available, including JAWS from Freedom Scientific, Window-Eyes from GW Micro, and Thunder from Screenreader.net. If you have Windows 2000 or XP, you'll find that Microsoft have included a basic screen reader in the operating system, called Narrator: try activating it, opening Notepad and typing some text, or checking your email, without looking at your screen.

The goal of a screen reader is to make it appear to the user as if the current application were itself a talking application designed specifically for blind users. This is difficult to accomplish. Applications often have particular user controls or methods of operation that must be supported by the screen reader. For example, a spreadsheet program operates very differently from an email client. This forces screen reader developers to adapt their programs to support specific applications, typically the market leaders like Microsoft Word. It also means that applications that utilise simple interface components like menus and text boxes will work best with screen readers. Those with non-standard interface components like 3D animations may be difficult for a screen reader to access.

The second way for a blind person to use a computer is to take advantage of self-voicing applications. These are usually applications written specifically for blind people that provide their output through synthesised or recorded speech. The obvious advantage is that the application designer can ensure that what is communicated to the user is exactly what the designer wants communicated - although this assumes that the designer's conception of what the user needs or wants to hear is correct! Aside from the extra design and development required to produce a self-voicing application, the main drawback is that the application cannot be used at the same time as the user's screen reader. If the application usurps the screen reader, the user's customary interface to the computer, it takes upon itself the responsibility for being at least as comfortable and usable for the user as their screen reader. Users become accustomed to their particular screen reader and its operation and will have it configured just as they want it. The hotkeys of a self-voicing application may be different; the voice may be different, and have different characteristics. For example, many users set their screen reader to speak as fast as possible, which sounds odd if you have never heard it before but makes sense once you are accustomed to it. With a self-voicing application, the user may even have to switch off their screen reader, which is most undesirable if they want to use another non-self-voicing application at the same time.

Whether using a screen reader or a self-voicing application, the use of the sense of hearing rather than vision has great implications for the design of the interface. The visual sense, or visual modality, has an enormous capacity for communicating information quickly and easily. If you look at an application on your computer display you will immediately notice the menus, icons, buttons and other interface controls arrayed about the screen. Each represents a function that is available to you, and a quick glance allows you to locate the function you want and immediately activate it with the mouse. Say the application is a word processor: you can go straight from reading the text of your document to any one of the functions offered by the interface. Now imagine that to find the print function you have to start at the top left-hand corner of the screen and go through each control in turn, waiting for its function to be described to you, until you find the one you require. Of course, experienced blind computer users will not rely on navigating through menus for every function. They will utilise shortcut keys, such as "CTRL-P" to print a document, develop combinations of keystrokes to complete their most common tasks, and learn the location of commonly-used functions in menus and applications. This requires, however, a consistent user interface, where shortcut keys and keystroke combinations can be relied upon to perform the same function each time and menu items are always located in the same place.

The important constraint on the use of computers by blind users is that they rely on hearing rather than sight. Why is this such a problem? First, blind users are constrained to examining one thing at a time, in an order not of their own making: they do not know the structure of things before they explore them. This is the problem with unfamiliar, rich, new interfaces. Second, blind users have to listen to a surprising amount of text to obtain the same amount of information as a sighted user might gain in a quick glance. A sighted user can skim a large document, scanning the chapter and paragraph headings for a key word or phrase, because they can instantly distinguish the headings from the body text and see what words they contain. A blind user, even if they can jump from heading to heading, has to wait for the comparatively slow screen reader to speak each heading: setting it to read as fast as possible might seem more sensible now.

These two constraints, fixed order of access and time to obtain information, mean that interfaces that rely on hearing must comply with a principle of maximum output in minimum speech. This greatly changes usability: superfluous information is not just a distraction, as a page with lots of links might be for a sighted user, but a real barrier to using the interface. Blind users must not be asked to use a complex interface with many options. If a user misses some output, it will need to be read out again, so an explicit way to repeat things is required. Most importantly, users need control over what is being said: sighted users can move their gaze wherever they want whenever they want, and blind users need some similar control of the focus. Imagine reading something where you can only see one word at a time with no way to go back or forwards. Non-visual interfaces need to provide means to navigate through the document, stop, go back, skip items, repeat and explore the text available. This affects how blind people browse web pages, as we will find out next.

3 Access to the web

So, knowing some of the problems that blind people have with accessing computers in general, how can they access the wonders of the World Wide Web in particular? What are the particular characteristics of browsers and more especially web pages themselves?

Websites vary enormously, but a quick browse around the most popular sites will reveal a common characteristic: a heavily visual graphical interface. There are images, including animated advertising banners; non-linear page layouts, like a newspaper front page with items and indices arranged around the screen; and navigation menus and input controls for search functions and user input. And these are only the simple, static items: advanced sites now take advantage of dynamic web page features, such as whole user interfaces written in Flash. For every Google, applauded for its simple and accessible user interface, there is another website with tabs, buttons, pop-ups and other features that are great for sighted users.

It is important to realise not only that web pages are full of rich features, but also that their arrangement on the page is completely non-standard. We have described how blind users can use complex graphical applications by using hotkeys and learning the user interface. This requires a consistent user interface. Surf around some more websites, and you will quickly realise that no such consistent user interface exists for web pages. In fact, a single web page can be as rich a user interface as a standalone application. Imagine arriving at an online bookshop's website, with all those images, links, titles and text paragraphs, and having to start at the top left-hand corner and progress one item at a time through the page to find the login to check your last order. No shortcut keys are available for useful functions like "search this website" or "contact the website owner" that might be offered on the page. Every website has a different user interface which must be explored and understood before it can be used, and this places great demands on blind users. So, how does a blind user start to get to grips with these pages?

The immediate response might be to use the user's screen reader to access a conventional browser like Internet Explorer. This has problems: we know that each application makes different demands on the screen reader, and the heavily-visual and non-standard interfaces of web pages pose considerable difficulties to a screen reader. Navigating the web can be compared to trying to use the largest and most complex application that a blind person will ever attempt. A specific problem with Internet Explorer is that the user's need to move around the document, which we described above, is complicated by the lack of a caret on a web page. A caret is the indicator of the position at which you will enter or delete text, usually shown as a flashing vertical bar in a text editor. Sighted users can simply glance at a different area to change their focus, but screen reader users need to move the focus of the screen reader to the area of interest, and this is normally done by moving the caret. Browser windows, however, do not have carets: you can only scroll the whole page up and down and look for the text of interest. The only items you can select individually are links and form items. A screen reader could simply read a web page displayed in Internet Explorer from the very top of the page to the bottom, but this would be immensely time-consuming for the user. Tables, frames and forms further complicate a web page. This is not to say that using a screen reader is impossible: advanced screen readers do provide special navigation modes for web pages, with a great deal of success. After all, web browsing is one of the common applications that a screen reader developer will try to support. However, complex navigation mechanisms are the result, and whilst these are excellent for experienced and highly skilled users, they are not necessarily ideal for the newly blind user who may be coming to the technology late in life.
Web access is a general, not a specialist, need, and must support a general, non-specialist group of users.

So, why not write a self-voicing web browser? Some have been developed, such as IBM Home Page Reader. They can be geared to the needs of the user group, although the general problems with self-voicing applications described above apply. The developer, even if they could re-use existing browser and parser technology, has to design a complex new interface for the complex graphical web pages to be browsed. Users will usually have a screen reader already: why make them learn to use a new application, rather than further develop and utilise their existing screen reader skills? Web pages are complex enough and vary in their own interfaces: can we keep the user in familiar territory at least in trying to navigate through these rich structures?

One alternative solution that we propose is to translate the web page content into a 'screen reader-friendly' format. To do this we take a normal graphical web page and strip out superfluous information like decorative images or table-based visual formatting to produce a simpler navigable document that is in accord with the principle of maximum output in minimum speech we established earlier. We can give the user a cursor and let them loose in the translated document, so they have control over what is being read out and they can explore the document on their own terms. The user interface is simplified. The user can use their familiar, trusted screen reader, so the necessary learning curve for getting used to browsing the web through this interface should be less steep. A number of applications based on this theory have been developed, including Franck Audio Data's WebFormator and the Baum Web Wizard. Our version is called WebbIE.

4 WebbIE

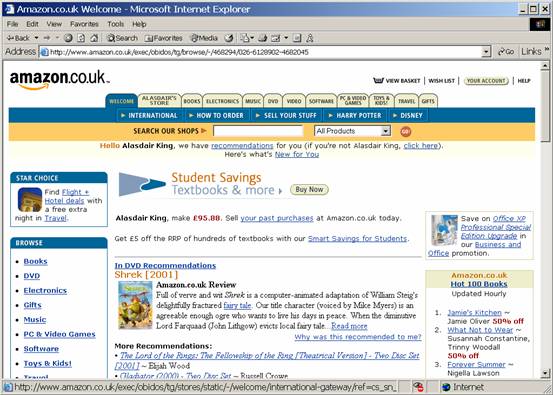

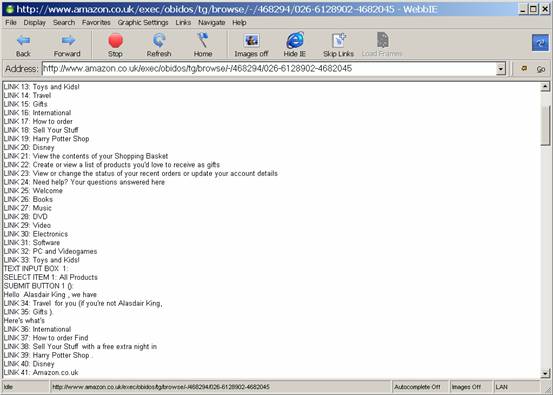

WebbIE re-presents the information from a web page in an accessible format suitable for a screen reader - a panel of plain text (see Figure 1). We did not want to build a browser, so we utilised the Microsoft WebBrowser object, which gives a program its own internal Internet Explorer. This can fetch a web page and parse it into the standard World Wide Web Consortium (W3C) Document Object Model (DOM), which WebbIE can then query for information on the web page. This takes care of the back-end processing and leaves us free to work on the user interface. Since IE is so widespread a browser, we can expect almost every page to support it, which means that we do not have to worry about unsupported web page features. The drawback is that the application will only work on Windows machines with Internet Explorer 5 or higher, but this includes a very significant number of machines.

Of course, we still have to decide what to do with the web page features that have been parsed and provided to us. We use the WebBrowser to obtain information on the links and forms on the page, since they are vital for navigation and use (for example search engines, commerce sites, or database queries). All types of HTML link are supported, including images and image maps. We then obtain the HTML for the body of the page from the WebBrowser and parse it directly, generating a text-only document more like a plain text file than a web page. This allows us to discard images and redundant mark-up, like tables used for visual formatting, while still communicating important structural mark-up such as headings or lists, mostly by using simple new lines for new paragraphs, headings or list items. Following the principle of maximum output in minimum speech, the user can choose what non-text content is displayed, so image descriptions can either be provided or discarded. The output is plain text, which can be moved around or searched like a text document but retains its vital HTML functionality with forms and links (see Figure 1).
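To make the linearisation step more concrete, here is a minimal sketch in Python using the standard library's `html.parser`. It is not WebbIE's code, which works through the Microsoft WebBrowser object; the class name `TextLineariser`, the `show_image_descriptions` option and the exact output labels (modelled loosely on the LINK, TEXT INPUT, SELECT ITEM and SUBMIT BUTTON markers in Figure 1) are assumptions for illustration. Headings, paragraphs and list items simply become new lines, scripts are dropped, and image ALT text is kept only inside links or when the user asks for image descriptions.

```python
from html.parser import HTMLParser

# Tags that begin a new output line. Following the "maximum output in
# minimum speech" principle, headings, paragraphs and list items are shown
# simply as new lines, with no extra spoken labels.
BLOCK_TAGS = {"p", "div", "br", "li", "tr", "table", "ul", "ol", "form",
              "blockquote", "h1", "h2", "h3", "h4", "h5", "h6"}


class TextLineariser(HTMLParser):
    """Hypothetical sketch: flatten an HTML page into plain-text lines."""

    def __init__(self, show_image_descriptions=False):
        super().__init__()
        self.show_image_descriptions = show_image_descriptions
        self.lines = []       # finished output lines
        self.current = []     # text fragments for the line being built
        self.hidden = 0       # nesting depth inside <script>/<style>/<select>
        self.in_link = False  # True while inside <a href="...">

    def _new_line(self):
        # Collapse runs of whitespace; keep the line only if it has text.
        text = " ".join("".join(self.current).split())
        if text:
            self.lines.append(text)
        self.current = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag in ("script", "style"):
            self.hidden += 1
        elif tag in BLOCK_TAGS:
            self._new_line()
        elif tag == "a" and a.get("href"):
            self._new_line()
            self.current.append("LINK ")
            self.in_link = True
        elif tag == "img":
            alt = (a.get("alt") or "").strip()
            # Images are discarded unless they label a link or the user
            # has asked for image descriptions.
            if alt and (self.in_link or self.show_image_descriptions):
                self.current.append(f" {alt} ")
        elif tag == "input":
            self._new_line()
            kind = (a.get("type") or "text").lower()
            if kind == "text":
                self.lines.append("TEXT INPUT")
            elif kind == "submit":
                label = a.get("value") or ""
                self.lines.append(f"SUBMIT BUTTON {label}".strip())
        elif tag == "select":
            self._new_line()
            self.lines.append("SELECT ITEM")
            self.hidden += 1  # this sketch does not list the <option>s

    def handle_endtag(self, tag):
        if tag in ("script", "style", "select"):
            self.hidden = max(0, self.hidden - 1)
        elif tag in BLOCK_TAGS:
            self._new_line()
        elif tag == "a" and self.in_link:
            self._new_line()
            self.in_link = False

    def handle_data(self, data):
        if not self.hidden:
            self.current.append(data)

    def result(self):
        """Flush any buffered input and return the text, one item per line."""
        self.close()
        self._new_line()
        return "\n".join(self.lines)


if __name__ == "__main__":
    page = """<h1>Bookshop</h1><p>Welcome to our <b>online</b> shop.</p>
    <img src="spacer.gif" alt=""><a href="/cat"><img src="c.gif" alt="Catalog"></a>
    <form><input type="text" name="q"><input type="submit" value="Search"></form>"""
    parser = TextLineariser()
    parser.feed(page)
    print(parser.result())
```

The spacer image disappears, the image link survives as a single labelled line, and the bold mark-up leaves no trace, so the screen reader has as little as possible to say about layout.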

Figure 1: WebbIE in action at Amazon, showing the original website and WebbIE's presentation of it. Note the links (LINK) and the form elements (TEXT INPUT, SELECT ITEM, SUBMIT BUTTON).

Additional navigation features are provided to let the user move a caret around the text, allowing the user to control what their screen reader reads to them. For example, the user can skip over any links to the next piece of text, which comes in useful for those pages that use navigation bars at the top of the page. Filling in forms is done within WebbIE: the user moves the cursor to a form item and hits return. They complete a simple text box and the page is updated with the input for review.
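The caret model can be sketched as a cursor over the lines of linearised text. The `TextCursor` class below is a hypothetical illustration, not WebbIE's implementation, and it assumes each link occupies its own line beginning with "LINK", as in Figure 1. Reading the current line again gives the explicit repeat that a speech interface needs, and `skip_links` jumps past a run of navigation links to the next piece of ordinary text.

```python
class TextCursor:
    """Hypothetical line-based caret over linearised page text."""

    def __init__(self, lines):
        self.lines = list(lines)
        self.pos = 0  # index of the line the caret is on

    @staticmethod
    def is_link(line):
        # Assumes links are rendered as their own "LINK ..." lines.
        return line == "LINK" or line.startswith("LINK ")

    def current(self):
        """Return the current line; calling it again repeats the line."""
        return self.lines[self.pos] if self.lines else ""

    def next_line(self):
        if self.pos < len(self.lines) - 1:
            self.pos += 1
        return self.current()

    def previous_line(self):
        if self.pos > 0:
            self.pos -= 1
        return self.current()

    def skip_links(self):
        """Move to the next non-link line; stay put if there is none."""
        for i in range(self.pos + 1, len(self.lines)):
            if not self.is_link(self.lines[i]):
                self.pos = i
                break
        return self.current()


if __name__ == "__main__":
    page = ["LINK Home", "LINK Books", "LINK Music", "Today's offers", "LINK Details"]
    cursor = TextCursor(page)
    print(cursor.skip_links())     # jumps over the navigation bar
    print(cursor.previous_line())  # steps back to the last navigation link
```

Because the screen reader only ever has to read the line under the caret, the user keeps control over what is spoken and when.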

When the user initiates an action that results in an exit from the page, such as clicking on a link to another web page or hitting the submit button on a form, the action is passed back to the WebBrowser object, which processes it as a normal user-generated event and gets the new web page or submits the form depending on the event. WebbIE is updated with the result returned from the website. The user therefore enjoys a fully-functional text-only web browser.

Better still, if plug-ins or support applications are installed, the WebBrowser will trigger their action automatically when their content type is encountered, which means that you can access things like streaming audio from news or radio sites. You can make the native Internet Explorer window visible if you want to access the web page directly, for example to use JavaScript controls or Java applets. The IE favorites are available to use and amend. WebbIE also supports secure access to web pages (the SSL protocol), so a blind user can shop safely.

We mentioned that many visually impaired people have some functional vision, and that they can benefit from magnification technology. WebbIE does provide some support for these users by allowing them to increase the font size of the text and change (invert) the colours, but it does not magnify images, so it is not a magnification program per se. Users might find the simpler WebbIE interface to web pages easier to use than scrolling around a magnified but still very complex graphical web page.

5 Problems that WebbIE faces

The problems that WebbIE encounters with some web pages are interesting because they illustrate problems that any non-visual web browser will encounter. They can be divided into three sets: how to present web pages to users; poor use of HTML; and inaccessible content.

Presenting web pages to users

The principle of maximum output in minimum speech demands that we decide which mark-up features of HTML are communicated to the user and how they can best be presented. To give an example, HTML defines bulleted lists of items: do we need to communicate to the user that this is a bulleted list, by adding to the text something like "Bullet item:" at the beginning of each line, or do we simply provide a new line? There are six levels of heading in HTML, usually displayed with different fonts and sizes: does the user need to know the level of their current heading? Is it more important that the structural features of the web page are communicated to the user, or is it better that the user can work through the web page as quickly as possible to find what they want? With WebbIE we have generally gone with the latter approach, so the majority of mark-up features - headings, paragraph breaks or lists for example - are simply presented with new lines. An interesting related problem concerns items that are useful for sighted users, such as navigation bars at the top of each page, but undesirable for blind users, who are then faced with a long list of links at the top of each page (some sites may have more than 50 such links). WebbIE allows users to skip to the first non-link line, but a more elegant solution might be the mark-up of the navigation bar as a navigation feature that can be skipped unless requested specifically. The W3C provides a mechanism for this in HTML, but few website designers take advantage of it. In general the problem of how to squeeze the output of a rich visual medium through a more restrictive speech-based interface requires compromises and design decisions with no "right" answer. Testing with real-life users is the best solution.
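The cost of announcing structure can be made concrete by counting words. This hypothetical sketch renders the same list either tersely, as plain new lines (WebbIE's general approach), or with a spoken prefix such as "Bullet item:"; the function names are invented for illustration, and the word count is only a rough, assumed proxy for listening time.

```python
def render_list(items, announce=False):
    """Render list items as lines, optionally announcing each as a bullet."""
    prefix = "Bullet item: " if announce else ""
    return [prefix + item for item in items]


def words_spoken(lines):
    """Rough proxy for listening time: the number of words read out."""
    return sum(len(line.split()) for line in lines)


if __name__ == "__main__":
    items = ["Books", "Music and DVDs", "Gifts"]
    for announce in (False, True):
        lines = render_list(items, announce)
        print(words_spoken(lines), lines)
```

For this three-item list the announced form asks the user to hear 11 words instead of 5, which suggests why structural labels need to earn their place in a speech interface.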

Poor use of HTML

There are three main problems with poor use of HTML, and they are all preventable. The first is especially simple. Images are useless to blind users, but HTML allows a text description to be attached to an image through the ALT attribute, commonly called an ALT tag. Most images can be discarded when a web page is presented in WebbIE, but images that are used as links must somehow be communicated to the user to tell them where the link will take them. Adding an ALT tag to the link image allows WebbIE to provide useful information to the user, for example "Link to catalog". Without the tag the only information that can be provided is the destination URL of the link. More and more sites use server-side processing to produce dynamic pages, which can lead to a list of identical links to a web page called something like "script.pl?username=Alasdair". This is most disheartening for a blind user, who will have to find the content they want by trial and error or give up on the site completely. The next two problems are more annoyances than show-stoppers. Frames and the use of tables to provide visual structure can be worked around by WebbIE, but can produce disjointed content when presented to the user solely as text. For example, HTML always describes tables from left to right, row by row. If a designer has intended the layout of items in a table to carry meaning for sighted users, for example putting links in one row and descriptions of the links in the row below, the items will look attractively lined up for sighted users, but a blind user will meet all the links and then all the descriptions with no obvious connection between them. Frames require WebbIE to fetch the frame content separately, and often produce a long list of internal navigation links at the top of every page, but these problems are generally surmountable.
More difficult are a few websites that seek to prevent users from accessing frame content directly, and use JavaScript to forcibly reinstate the frames - WebbIE cannot get at the content within the frames, and the user is left with nothing but a description of the frames.
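Two of these problems can be shown in a few lines. In this hypothetical Python sketch, `link_label` falls back to the destination URL when an image link has no ALT text, which is how a page of dynamically generated links collapses into identical, meaningless labels; `linearise_table` reads cells left to right, row by row, as HTML orders them. The function names and the "LINK" prefix are assumptions for illustration, not WebbIE's code.

```python
def link_label(alt, href):
    """Spoken label for an image link: its ALT text, else its URL."""
    alt = (alt or "").strip()
    return f"LINK {alt}" if alt else f"LINK {href}"


def linearise_table(rows):
    """Flatten a table in source order: left to right, row by row."""
    return [cell for row in rows for cell in row]


if __name__ == "__main__":
    print(link_label("Catalog", "/catalog.html"))
    # Three image links without ALT text on a dynamically generated site:
    for _ in range(3):
        print(link_label(None, "script.pl?username=Alasdair"))
    # Links laid out in one row, their descriptions in the row below:
    layout = [["LINK Books", "LINK Music"],
              ["New titles every week", "Charts and reviews"]]
    print(linearise_table(layout))
```

A blind user hears both links before either description, with nothing in the text to connect "LINK Music" to "Charts and reviews".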

Inaccessible content

The HTML coding problems might be resolved with more attention to accessibility by web designers, but experience suggests that this is optimistic. We will therefore continue to develop WebbIE to handle problematic HTML code. However, the third type of access problem is more fundamental. HTML is essentially a text-based format designed for the presentation of text documents. This is perfect for blind users but not for website designers, who have turned to many alternative technologies to add active functions to their pages and achieve the desired visual effects. These technologies include Adobe Acrobat files, Java applets, and most recently entire websites built in Shockwave Flash. Strategies for coping with these largely depend on the extent to which the companies behind these proprietary formats have worked to make them accessible to screen readers. WebbIE users can switch to the Internet Explorer view and access the content if their screen reader can access the format. Embedded objects like Java applets can have ALT tags like images, but this does not give access to their content (though since many applets simply add animation or other visual effects to an image, this may not matter). A more subtle problem is the use of JavaScript in pages where it replaces functions normally handled by HTML, such as submitting forms or linking to another web page. For example, if a designer uses a JavaScript onClick event handler to create a link rather than the normal HTML anchor tag, WebbIE will have great difficulty in identifying and following the link. An accessible interface to the JavaScript functions of a web page would be possible to develop, but whether it would be desirable or even usable by blind users is another matter. New technologies are introduced to the web all the time: all we can ask is that accessibility issues are addressed by their developers.
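The difficulty with script-driven links is that the destination is hidden inside code rather than stated in the mark-up. This hypothetical sketch, again using Python's `html.parser`, sorts a page's clickable elements into ordinary links with a usable `href` and script-only actions; the class name `LinkFinder` is invented for illustration. The parser lowercases attribute names, so `onClick` arrives as `onclick`. For the second group there is no URL to follow: finding out where `openPage(3)` leads would mean running the page's JavaScript.

```python
from html.parser import HTMLParser


class LinkFinder(HTMLParser):
    """Hypothetical sketch: separate HTML links from script-only 'links'."""

    def __init__(self):
        super().__init__()
        self.links = []        # destinations a text browser can follow
        self.script_only = []  # actions that exist only as JavaScript

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)  # attribute names arrive lowercased
        href = (a.get("href") or "").strip()
        onclick = a.get("onclick")
        is_js_href = href.lower().startswith("javascript:")
        if tag == "a" and href and not is_js_href:
            self.links.append(href)
        elif onclick or is_js_href:
            self.script_only.append(onclick or href)


if __name__ == "__main__":
    finder = LinkFinder()
    finder.feed('<a href="/books">Books</a>'
                '<span onClick="openPage(3)">Music</span>'
                '<a href="javascript:go(4)">Films</a>')
    print("followable:", finder.links)
    print("script only:", finder.script_only)
```

A text-only browser can present and follow everything in `links`, but for `script_only` it can at best report that something clickable exists.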

Alasdair King, February 2004. Last updated June 2008.