I see around the Internet articles about accessibility APIs and standards like MSAA and IAccessible2, and I don’t think they reflect the history of the technology as much as the politics of the technology. This is a quick note of my memory and take on it from my experience working in screenreaders and AT.

Microsoft comes up with the first accessibility API in the late 90s, MSAA. It’s fine for buttons and textboxes and checkboxes, but richer user interfaces are too much for it – the document area in Word, or web pages.

So Microsoft comes up with UI Automation (UIA), which is a richer accessibility API that addresses the shortcomings of MSAA. This was in 2005.

But no-one picks it up properly: within Microsoft, Office doesn’t, I assume because of the usual Office versus Windows internal politics. Internet Explorer doesn’t, because Microsoft has stopped developing Internet Explorer. And outside Microsoft, all the screenreader guys have already written their code to “slurp” the contents of Internet Explorer and Office, so they don’t care that UIA doesn’t become the new standard. (And if UIA fails, so much more value for their products, which cost lots of money and so can support developers to write custom code for each and every program that fails to support accessibility standards…)

Outside Microsoft UIA adoption is even worse: Sun and IBM actually go to the trouble of creating a competing accessibility API, IAccessible2, and foist that on Java and OpenOffice to aid their competition with Microsoft and prevent Microsoft owning the accessibility API on Windows like Microsoft owns the document format standard (Word) and the web page standard (IE). (Disabled users have a history of being used to further other agendas, whether they know it or not…) Of course, nerds rally round and also foist it on Firefox, then the only Internet Explorer alternative, because hey everyone hates Micro$oft, right?

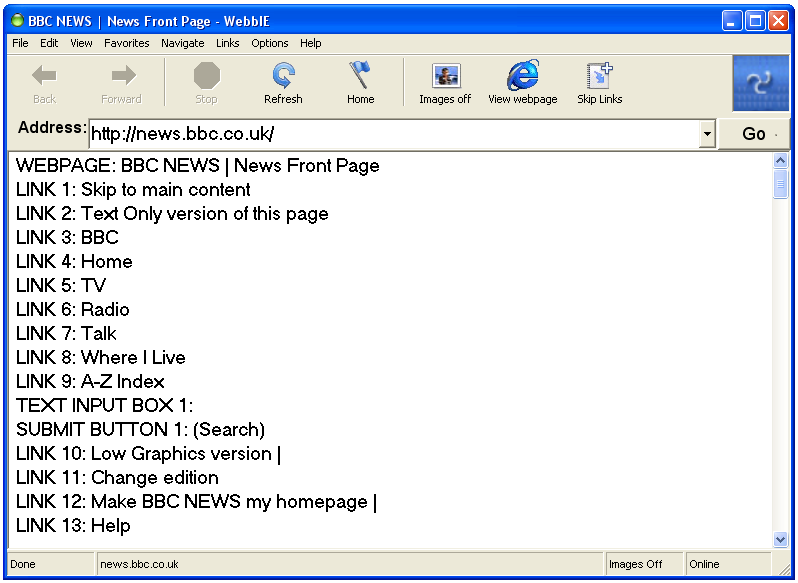

But, it’s important to understand, at this point in history – mid 00s – things are kind of OK. JAWS and NVDA and other screenreaders write special code (accessing some kind of application-specific API like the Word DOM) to talk to really really important applications, like Internet Explorer and Office, and use the MSAA accessibility API to talk to everything else. We all argue about the price of screenreaders and whether videos should autoplay but everything is stable. If you want to use more applications with your screenreader you pay more for a screenreader with more special code – JAWS is the standard, of course.

Then it all changes. The web and mobile explodes, by which we mean HTML5 and Chrome and iOS breaking the Internet Explorer/Windows dominance, and websites becoming applications in their own right like Google Docs or Facebook. Internet Explorer is dead, the mainstream declares, which means the sophisticated support screenreaders have developed to talk to its application-specific APIs is all useless: at the same time the websites are getting more and more complex. MSAA isn’t going to cut it, and there is no application-specific API for Chrome, the new dominant browser (there is a chrome.automation in development – how nice!) And here we are in 2018, and it’s much harder to use a web app than a desktop application. (Of course, you’ve probably jumped ship to iOS and an iPad with VoiceOver, or you are using a restricted set of desktop applications…)

Hey, maybe UI Automation’s time has come! Everyone knows VoiceOver is super-accessible and great and built into macOS, and so Microsoft needs a better AT story round Office and Windows, so the newly-invigorated Microsoft builds UIA into Office and Edge and here we go! At least until Edge is killed off too…

(The usual caveats and apologies about focusing mainly on blind people apply: showing my history in that area.)